DDAS - Tricking Dynamic Deep Neural Networks on The Edge: Adversarial Attacks Targeting System Resources

Overview

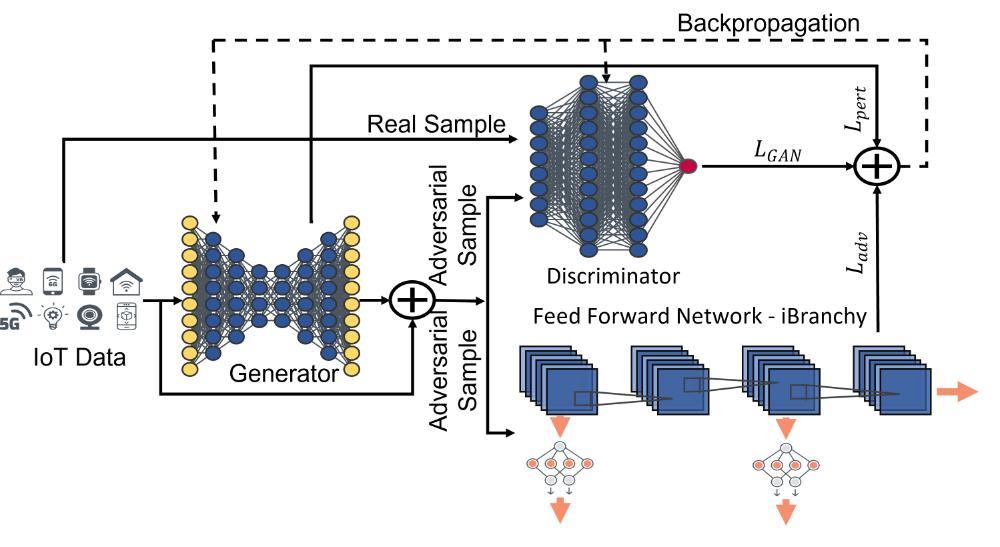

The popularity of edge-based IoT devices has surged in recent years, largely due to growing demand for emerging applications such as remote health monitoring, augmented reality, and video analytics. These applications typically require low latency, low power consumption, and high efficiency, and they often rely on machine learning models such as Deep Neural Networks (DNNs). To meet these requirements, the models must deliver faster inference with less computation. In response, Dynamic DNNs have been developed. They perform conditional computation, activating only the portions of the network needed for a given input. Dynamic DNNs have become crucial for edge-based applications because they save inference time and edge resources. However, our research has uncovered a vulnerability in Dynamic DNNs, which we exploit with what we call Dynamic DNN Adversarial attacks (DDAS). Unlike traditional adversarial attacks, which focus solely on classification accuracy, DDAS targets the resources of the hosting edge-based IoT device, draining its battery and increasing its inference latency. The goal of this project is to show that DDAS is highly effective under a variety of scenarios. We also aim to investigate potential countermeasures against this novel type of attack and ways to strengthen existing models against it.

The primary goal of DDAS attacks is to interfere with the real-time execution of dynamic models by inducing the longest execution path, which consumes the most computational resources. Because the model must perform extra computation before arriving at a classification decision, these attacks increase the target model's average inference time and power consumption. As adversarial attacks, DDAS attacks typically also reduce classification accuracy; whether that drop is a desirable outcome depends on the attacker's goals.
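To make the "longest execution path" concrete, the sketch below models one common kind of dynamic DNN, an early-exit network, in which a classifier head after each block may stop inference once its prediction is confident enough. The logits, the three-exit layout, and the 0.9 threshold are hypothetical values chosen for illustration, not the project's model. A real network would only compute later blocks when an earlier exit declines; here the per-exit logits are precomputed for simplicity.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_inference(exit_logits: Sequence[Sequence[float]], threshold: float) -> Tuple[int, int]:
    """Return (predicted_class, exits_evaluated) for a hypothetical early-exit model.

    exit_logits[i] stands in for the output of the classifier head attached after
    block i. Evaluation stops at the first exit whose top-class probability reaches
    `threshold`; otherwise it continues to the final exit (the longest path).
    """
    for depth, logits in enumerate(exit_logits, start=1):
        probs = softmax(logits)
        confidence = max(probs)
        if confidence >= threshold or depth == len(exit_logits):
            return probs.index(confidence), depth
    raise ValueError("exit_logits must contain at least one exit")


# Illustrative (made-up) logits for a model with three exits.
clean = [[4.0, 0.0, 0.0], [5.0, 0.0, 0.0], [6.0, 0.0, 0.0]]
perturbed = [[1.0, 0.9, 0.8], [1.2, 1.0, 0.9], [3.0, 0.0, 0.0]]

print(early_exit_inference(clean, threshold=0.9))      # (0, 1): leaves at the first exit
print(early_exit_inference(perturbed, threshold=0.9))  # (0, 3): runs every block
```

Both inputs receive the same label, but the perturbed one keeps every intermediate exit below the threshold and therefore pays for the full network. This matches the case above where resource consumption rises even if accuracy is untouched.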

One way an attacker may execute a DDAS attack is by installing malware on the target system that listens to data sources, such as the cameras feeding the model. The malware monitors and records the model's inputs and outputs and uses them to train the DDAS attack to generate adversarial noise. It then feeds that noise back into the data stream, creating adversarial samples that are designed to target the model and reduce its responsiveness.

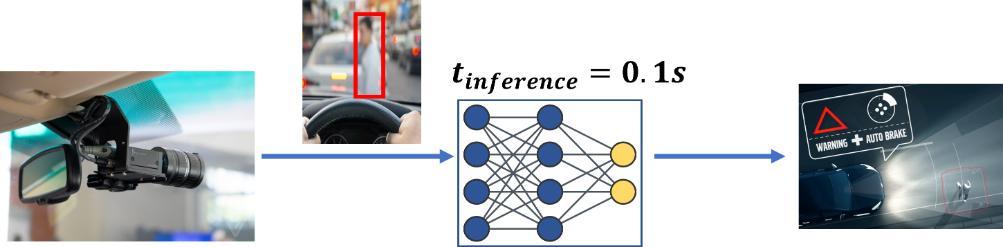

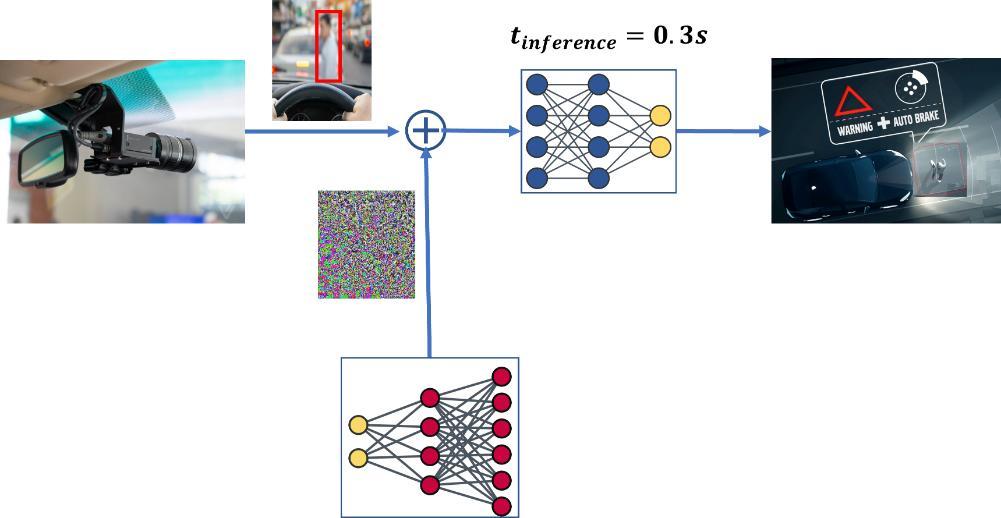

Autonomous vehicles are highly vulnerable to such attacks because they depend on rapid input processing to carry out crucial tasks such as traffic mapping and obstacle avoidance. Any lag in the system's response to these tasks can have disastrous consequences, including traffic accidents. The figure shows an example scenario: a DDAS attack targeting the autonomous braking system of a self-driving vehicle.

DDAS attack targeting the obstacle avoidance system of an autonomous vehicle. The attacker taps into the camera feed and adds adversarial noise, which increases inference time and delays the decision. As a result, the vehicle detects a pedestrian later than the threshold required for safe braking, causing an accident.
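The safety impact of added latency can be estimated with simple kinematics: at a constant speed v, an extra inference delay Δt means the vehicle travels an additional v·Δt before it can even begin braking. The helper below is a hypothetical illustration, and the speed and latency values are example numbers, not measurements from this project.

```python
def distance_during_delay(speed_kmh: float, added_latency_ms: float) -> float:
    """Extra metres travelled before braking can begin, assuming constant speed."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # convert km/h to m/s
    return speed_m_per_s * (added_latency_ms / 1000.0)  # ms to s, then distance


# Example values chosen for illustration only.
print(round(distance_during_delay(50.0, 100.0), 2))  # 1.39
```

At 50 km/h, for instance, every additional 100 ms of inference time shifts the start of braking by roughly 1.39 m, which can be the difference between stopping short of a pedestrian and not.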

In summary, DDAS attacks have three goals: 1) to produce samples that closely resemble the original input, 2) to specifically exploit the early-exit capability of dynamic models, and 3) to offer a means of regulating the effect on classification accuracy. We aim to show that such an attack is achievable by modifying existing adversarial attacks.
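One way to express these three goals as an attack objective is sketched below. This is a hypothetical formulation for illustration, not the authors' method. An L∞ projection (as used in PGD-style attacks) keeps the perturbed input within ε of the original (goal 1). A confidence term penalizes high confidence at the intermediate exits so that no early exit fires (goal 2). A weight `alpha` on the final-exit cross-entropy regulates the effect on accuracy (goal 3). The function names, the `alpha` parameter, and the choice of loss terms are all assumptions.

```python
import math
from typing import List, Sequence


def top_confidence(logits: Sequence[float]) -> float:
    """Highest softmax probability for one exit's logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    return max(exps) / sum(exps)


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Cross-entropy of the true label under softmax(logits), computed stably."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[label]


def delay_objective(exit_logits: Sequence[Sequence[float]], label: int, alpha: float) -> float:
    """Hypothetical attacker objective, to be minimized.

    - Sum of top-class confidences at the intermediate exits: lowering it keeps
      every early exit below its threshold, forcing the longest path (goal 2).
    - alpha * final-exit cross-entropy: alpha > 0 rewards misclassification,
      alpha < 0 rewards keeping the true label, alpha = 0 ignores accuracy (goal 3).
    """
    intermediate = exit_logits[:-1]
    confidence_term = sum(top_confidence(logits) for logits in intermediate)
    accuracy_term = cross_entropy(exit_logits[-1], label)
    return confidence_term - alpha * accuracy_term


def project_linf(x_adv: Sequence[float], x_orig: Sequence[float], epsilon: float) -> List[float]:
    """Clip each feature to within epsilon of the original input (goal 1)."""
    return [min(max(a, o - epsilon), o + epsilon) for a, o in zip(x_adv, x_orig)]
```

In a complete attack, this objective would be minimized by taking gradient steps on the input and calling `project_linf` after each step. Adapting an existing gradient-based attack in this way mirrors the idea of building DDAS by modifying existing adversarial attacks.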

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.