ClassyNet - Class-Aware Early Exit Neural Networks for Edge Devices

Overview

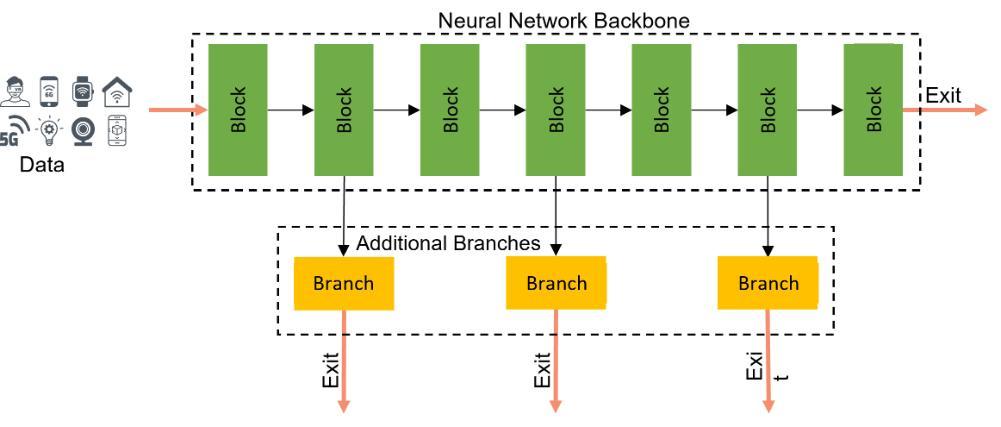

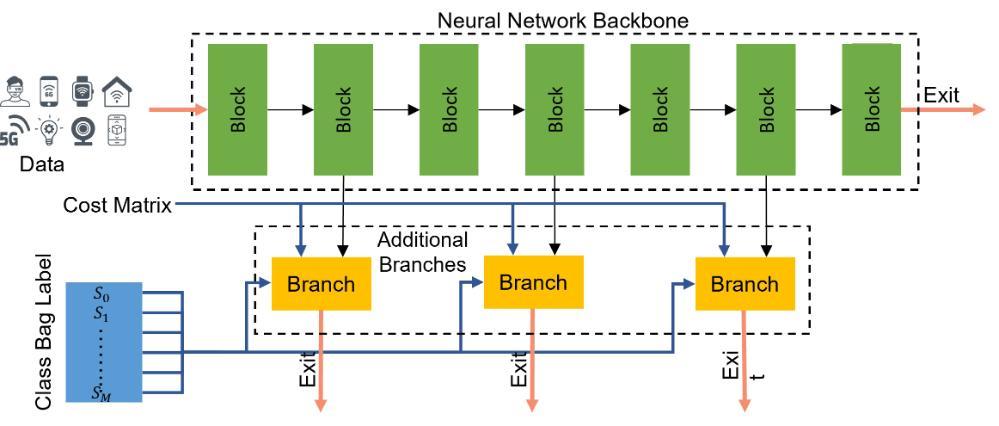

Edge-based and IoT devices have experienced phenomenal growth in recent years, driven by rapidly increasing demand from emerging applications that use machine learning models such as Deep Neural Networks (DNNs). However, DNNs have large storage/memory requirements and high computational cost, which make them difficult to deploy on edge devices. This led to the development of early-exit models such as BranchyNet, which attach dedicated exits to the inner layers of the architecture so that a decision can be made at an earlier stage. However, existing early-exit models offer no control over which classes leave the network at which exit. Class-aware models are needed in many edge applications where specific important classes must be detected earlier because of their temporal importance. In this project, we aim to design and develop ClassyNet, the first early-exit architecture capable of returning only selected classes at each exit. Because the first layers can be deployed on edge devices, this speeds up inference for sensitive classes, saving significant computation time and edge resources while maintaining high accuracy.
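
The class-aware exit rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not ClassyNet's implementation: each early exit is assumed to have a fixed set of classes it is allowed to emit, and an exit fires only if its top prediction is in that set and its softmax confidence reaches a threshold. The names `class_aware_exit`, `classify`, `exit_classes`, and the 0.9 threshold are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Set, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def class_aware_exit(logits: Sequence[float], allowed: Set[int], threshold: float) -> Optional[int]:
    """Return the predicted class if this exit may emit it confidently, else None."""
    probs = softmax(logits)
    pred = max(range(len(probs)), key=probs.__getitem__)
    if pred in allowed and probs[pred] >= threshold:
        return pred
    return None  # defer the sample to a later exit


def classify(exit_logits: Sequence[Sequence[float]],
             exit_classes: Sequence[Set[int]],
             threshold: float = 0.9) -> Tuple[int, int]:
    """Return (predicted_class, exit_index).

    exit_logits[i] are the logits at exit i; the last entry is the final
    classifier, which may emit any class. exit_classes[i] is the (hypothetical)
    set of classes early exit i may emit, one set per early exit.
    For simplicity all logits are precomputed here; a real deployment would
    only run the layers up to the next exit when the previous one defers.
    """
    for i, (logits, allowed) in enumerate(zip(exit_logits[:-1], exit_classes)):
        pred = class_aware_exit(logits, allowed, threshold)
        if pred is not None:
            return pred, i
    final = exit_logits[-1]
    return max(range(len(final)), key=final.__getitem__), len(exit_logits) - 1


# Hypothetical 3-class example: early exit 0 may only emit class 2.
exit_classes = [{2}]
print(classify([[0.1, 0.2, 5.0], [0.0, 0.0, 3.0]], exit_classes))  # (2, 0): priority class exits early
print(classify([[5.0, 0.1, 0.2], [4.0, 0.0, 0.0]], exit_classes))  # (0, 1): class 0 must reach the final exit
```

The softmax-confidence test is only one possible exit criterion; an entropy threshold (as used in BranchyNet) or a learned exit policy could be substituted without changing the class-restriction logic.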

ClassyNet is a framework for class-aware dynamic early-exit classification models. Class-aware models, especially when combined with model splitting, offer several advantages. Priority (important/sensitive) classes can be pushed to early exits, which shortens their inference path and hence their computation time, helping the model meet its operational goals. Moreover, because edge devices have limited memory, only part of the model is kept on-device; inputs from the priority classes are processed entirely by this partial model and therefore avoid the overhead of transmission. We aim to design and train models with exits placed at the very beginning of the network that can accurately classify a substantial proportion of the targeted samples. As a result, an early-exit model can keep only a small fraction of its layers in the edge device's limited memory for early inference, while sending the more challenging samples to the cloud.
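
The benefit of splitting the model between the edge and the cloud can be estimated with simple arithmetic. The sketch below assumes a hypothetical latency profile (the names `SplitProfile` and `expected_latency` and all numbers are illustrative, not measurements): samples leaving at an on-device exit pay only the edge latency up to that exit, while the remaining samples run through all on-device layers, are transmitted, and finish in the cloud.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SplitProfile:
    """Hypothetical per-sample latencies (ms) for a model split between edge and cloud."""
    edge_exit_latency: Sequence[float]  # cumulative edge latency to reach each on-device exit, in order
    transmit_latency: float             # sending intermediate features from the device to the cloud
    cloud_latency: float                # running the remaining layers in the cloud


def expected_latency(profile: SplitProfile, exit_fractions: Sequence[float]) -> float:
    """Expected per-sample latency given the fraction of samples leaving at each on-device exit.

    Samples that do not exit on-device are assumed to traverse every on-device
    layer before being offloaded.
    """
    if not exit_fractions or len(exit_fractions) != len(profile.edge_exit_latency):
        raise ValueError("need one exit fraction per on-device exit")
    offloaded = 1.0 - sum(exit_fractions)
    if offloaded < -1e-9:
        raise ValueError("exit fractions must sum to at most 1")
    offloaded = max(offloaded, 0.0)
    # Samples that exit early only pay for the edge layers up to their exit.
    on_device = sum(f * t for f, t in zip(exit_fractions, profile.edge_exit_latency))
    # Offloaded samples pay for all edge layers, the transmission, and the cloud layers.
    offload_cost = profile.edge_exit_latency[-1] + profile.transmit_latency + profile.cloud_latency
    return on_device + offloaded * offload_cost


profile = SplitProfile(edge_exit_latency=[2.0, 5.0], transmit_latency=20.0, cloud_latency=10.0)
print(expected_latency(profile, [0.3, 0.2]))  # about 19.1
print(expected_latency(profile, [0.0, 0.0]))  # 35.0
```

With these made-up numbers, letting half of the samples exit on-device cuts the expected latency from 35 ms (every sample offloaded) to about 19.1 ms, which is why pushing priority classes to the earliest exits pays off most when transmission dominates.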

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.